As you know from holiday postcards, I spent time recently with Emily and Joe in Salt Lake City. They were full, rich, activating days, and I’m still sorting through the many gifts I received.

The immensity of the mountains in their corner of Utah was impossible to ignore, though I wasn’t there to ski them as much as to gaze up in wonder at their snow-spattered crowns for the first time. Endless, low-level suburbs also extend to their foothills in every direction, and while everyone says “It’s beautiful here,” they surely mean “the looking up” and not “the looking down.”

Throughout my visit, SLC’s sprawl was a reminder of how far our built environments fall short of our natural ones in a freedom-loving America that disdains anyone’s guidance on what to build and where. Those who settled here have filled this impossibly majestic valley with an aimless, caramel-and-rosy low-rise jumble that, from any elevation, has a fog-topping of exhaust in the winter and (most likely) a mirage-y shimmer of vaporous particulates the rest of the year.

So much for Luke 12:48 (“To whom much has been given…”).

Why not extrapolations of the original frontier towns, I wondered, instead of this undulating wave of discount centers, strip malls, and low-slung industrial and residential parking lots that have taken them over in every direction?

I suppose they’re the tangible manifestation of resistance to governmental guidance (or really any kind of collective deliberation) on what we can or can’t, should or shouldn’t be doing when we create our homelands, leaving it to “the quick buck” instead of any consciously developed, long-term vision to determine what surrounds us.

I fear that this same deference to freedom (or perhaps more aptly, to its “free-market forces”) may prove just as inevitable when it comes to artificial intelligence (AI). So I have to ask: Instead of making far, far less than we could from AI’s similarly awesome possibilities, why not commit to harnessing (and then nurturing) this breathtaking technology so we can achieve the fullest measure of its human-serving potential?

Unfortunately, my wider days beneath a dazzle of mountain ranges showed me how impoverished this end game could also become.

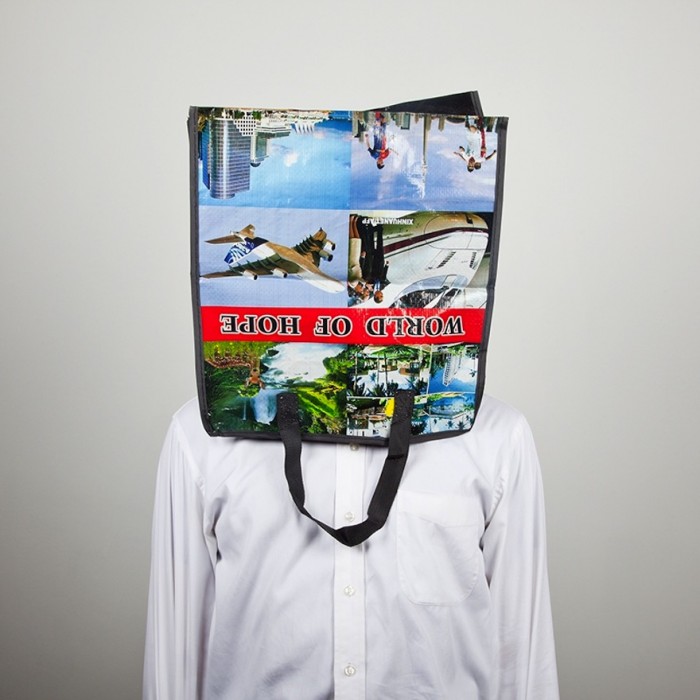

Boris Eldagsen submitted this image, called “Pseudomnesia: The Electrician,” to the recent Sony World Photography Awards. When he won in the contest’s “creative open” category, he revealed that his image was AI-generated, going on to donate his prize money to charity. As Eldagsen said at the time: “Is the umbrella of photography large enough to invite AI images to enter—or would that be a mistake? With my refusal of the award, I hope to speed up this debate” about what is “real” and “acceptable” in the art world and what is not.

The debate over that and similar questions should probably begin with a summary appreciation of AI’s nearly miraculous as well as fearsomely catastrophic possibilities. Both were previewed in a short interview with the so-called “Godfather of AI,” Geoffrey Hinton, on the TV newsmagazine 60 Minutes a couple of months ago.

Hinton, who looks a bit like Dobby from the Harry Potter films, brought a sense of calm and perspective to as compelling a story as I’ve heard about the potential upsides and downsides of “the artificial neural networks” that he first helped to assemble five decades ago. Here are a few of its highlights:

- After the inevitable rise of AI, humans will become the second most intelligent beings on Earth. In fact, within five years Hinton expects “that ChatGPT might well be able to reason better than us.”

- AI systems can already understand. For example, even the autocomplete features that interrupt us whenever we’re texting or emailing must “understand” what we’ve already typed, as well as what we’re likely to add, in order to offer up their suggestions.

- Through trial and error, AI systems learn as they go (i.e., machine learning), so that the system’s “next” guess or recommendation is likely to be more accurate (or closer to what the user is looking for) than its “last” response. That means AI systems can improve their functioning without additional human intervention. Among other things, this capacity gives rise to fears that, as they continue to get smarter, AI systems could gain advantages over, or even come to dominate, their human creators.

- Hinton is proud of his contributions to AI-system development, especially the opportunities they open in health care and in developing new drug-treatment protocols. But in addition to AI’s dominating its creators, he also fears for the millions of workers “who will no longer be valued” when AI systems take over their jobs, the even broader dissemination of “fake news” that AI will turbo-charge, and the use of AI-enabled warriors on tomorrow’s battlefields. Because system advancements are coming so quickly, he urges global leaders to face these challenges sooner rather than later.

Finally, Hinton argues for broader experimentation with, and regulation of, AI outside of the tech giants (like Microsoft, Meta and Google). Why? Because these companies’ primary interest is in monetizing a world-changing technology rather than maximizing its potential benefits for the sake of humanity. As you undoubtedly know, over the past two years many in the scientific-research, public-policy and governance communities have echoed Hinton’s concerns in widely publicized “open letters” raising alarm over AI’s commercialization.

Hinton’s to-do list is daunting, particularly at a time when many societies (including ours) are becoming more polarized over what constitutes “our common goods.” Maybe identifying a lodestar we could all eagerly aim for, like capitalizing on the known (and as yet unknown) promises of AI that can benefit us most, might help us find some agreement as the bounty begins to materialize and we begin to wonder how to “spend” it. Seeing a bold and vivid future ahead of us (instead of merely the slog that comes with risk mitigation) might give us the momentum we lack today to start making more out of AI’s spectacular frontier instead of less.

So what are the most thoughtful among us recommending in this regard? I ask because, once again, it will be easier to limit some of our freedoms around a new technology with tools like government regulation and oversight if we can also envision something that truly dazzles us at the end of the long, domesticating road.

Over the past several months, I’ve been following the conversation (alarm bells, recommended next steps, more alarm bells) pretty closely, and it’s easy to get lost in its emotional appeals and conflicting agendas. So I was drawn this week to the call to action in a short essay entitled “Why the U.S. Needs a Moonshot Mentality for AI—Led by the Public Sector.” Its engaging appeal, co-authored by Fei-Fei Li and John Etchemendy of the Stanford Institute for Human-Centered Artificial Intelligence, is the most succinct and persuasive one I’ve encountered on what we should be doing now (and encouraging others with influence to do) if we want to shower ourselves with the full range of AI’s benefits while minimizing its risks.

Their essay begins with a review of the nascent legislative efforts currently underway in Congress to place reasonable guardrails around the most apparent of AI’s misguided uses. A democratic government’s most essential function is to protect its citizens from those things (like foreign enemies during wartime) that only it can protect them from. AI poses that kind of individual and national threat through its capacity to spread disinformation, and the authors urge quick action on some combination of the pending legislative proposals.

Li and Etchemendy then talk about the parties that are largely missing from the research labs where AI is currently being developed.

> As we’ve done this work, we have seen firsthand the growing gap in the capabilities of, and investment in, the public compared with private sectors when it comes to AI. As it stands now, academia and the public sector lack the computing power and resources necessary to achieve cutting edge breakthroughs in the application of AI.
>
> This leaves the frontiers of AI solely in the hands of the most resourced players—industry and, in particular, Big Tech—and risks a brain drain from academia. Last year alone, less than 40% of new Ph.D.s in AI went into academia and only 1% went into government jobs.

The authors are also justifiably concerned that policy makers in Washington have been listening almost exclusively to commercial AI developers like Sam Altman and Elon Musk, and not enough to leaders from the academy and civil society. They are, if anything, even more outraged that “America’s longstanding history of creating public goods through science and technology” (think of innovations like the internet, GPS and the MRI) will be drowned out by the “increasingly hyperbolic rhetoric” that has lately been coming out of the mouths of some “celebrity Silicon Valley CEOs.”

They readily admit that “there’s nothing wrong with” corporations seeking profits from AI. The central problem is that those who might approach the technology “from a different [non-commercial] angle [simply] don’t have the [massive] computing power and resources to pursue their visions” that the profit-driven have today. It’s almost as if Li and Etchemendy want to level the playing field and introduce some competition between Big Tech and those in the academy and the public sector who are interested (but currently at a disadvantage) over who will be the first to produce the most significant “public goods” from AI.

Toward that end:

> We also encourage an investment in human capital to bring more talent to the U.S. to work in the field of AI within academia and the government.
>
> [W]hy does this matter? Because this technology isn’t just good for optimizing ad revenue for technology companies, but can fuel the next generation of scientific discovery, ranging from nuclear fusion to curing cancer.
>
> Furthermore, to truly understand this technology, including its sometimes unpredictable emergent capabilities and behaviors, public-sector researchers urgently need to replicate and examine the under-the-hood architecture of these models. That’s why government research labs need to take a larger role in AI.
>
> And last (but not least), government agencies (such as the National Institute of Standards and Technology) and academic institutions should play a leading role in providing trustworthy assessments and benchmarking of these advanced technologies, so the American public has a trusted source to learn what they can and can’t do. Big tech companies can’t be left to govern themselves, and it’s critical there is an outside body checking their progress.

Only the federal government, they argue, can “galvanize the broad investment in AI” that produces a level playing field where researchers within our academies and governmental bodies can compete with the brain trusts within our tech companies to produce the full harvest of public goods from a field like AI. In their eyes, it will take competitive juices (like those unleashed by Sputnik, which propelled America to the moon a little more than a decade later) to achieve AI’s true promise.

If their argument piques your interest as it did mine, there is a great deal of additional information on the Stanford Institute site, where the authors profile their work and that of their colleagues. It includes a three-week online program called AI4ALL, where those who are eager to learn more can immerse themselves in lectures, hands-on research projects and mentoring activities; a description of the “Congressional bootcamp” offered to representatives and their staffs last August, which is likely to be offered again; and the Institute’s white paper on building “a national AI resource” that will provide academic and non-profit researchers with the computing power and government datasets needed for both education and research.

To similar effect, I also recommend this June 12, 2023, essay in Foreign Policy. It covers some of the same territory as the Stanford researchers and similarly urges legislators to begin to “reframe the AI debate from one about public regulation to one about public development.”

It doesn’t take much to create a viral sensation, but these AI-generated images certainly created one when they were published. Here’s the short story behind “Alligator-Pow” and “-Pizza.” At some point in the future, we could look back on the olden days when AI’s primary contributions were to make us laugh or to help us finish our text messages.

Because we’ll (hopefully) be reminiscing in a future when AI’s bounty has already changed us in far more profound and life-affirming ways.

If the waves of settlers in Salt Lake City had believed that they could build something that aspired to the grandeur of the mountains around them—like the cathedrals of the Middle Ages or even some continuation of the lovely and livable villages that many of them had left behind in Northern Europe—they might not have “paved Paradise and put up a parking lot” (as one of their California neighbors once sang).

In similar ways, could having a worthy vision today, one that’s realized by the right gathering of competitors, make the necessary difference when it comes to artificial intelligence?

So will we domesticate AI in time?

Only if we can gain enough vision to take us over the “risk” and “opportunity” hurdles that are inhibiting us today.

This post was adapted from my January 7, 2024, newsletter. Newsletters are delivered to subscribers’ inboxes every Sunday morning, and sometimes I post the content from one of them here. You can subscribe (and not miss any) by leaving your email address in the column to the right.